We all know that everyone makes mistakes; it's common to all of us humans.

But sometimes the mistakes are really... well, how to put it... a little darker than the others.

Here is a list of "Big Tech Company Fails" that are completely off the charts. Let's dive into 5.

Amazon Made A Sexist HR Bot

As one of the world's biggest companies, Amazon has to go through a lot of job applications.

We don't know the exact numbers, but the volume was large enough that in 2014, Amazon tasked one of its teams with building an AI capable of filtering out the profiles that didn't fit the job.

Well, they managed to build a working demo.

The bad news?

It automatically disregarded any resume submitted by a woman.

In order to help HRBot figure out what a "good" application looked like, the development team fed it over a decade's worth of resumes, with the hope that it could learn how to identify a list of applicants that could then be passed on as per the company standard. The only problem was that the resume piles accidentally taught HRBot to weigh male candidates more favorably than female ones.

HRBot was more thorough than merely looking at the name at the top of each resume, though. If a candidate included a reference to a women's college or a women's sports team, they were disqualified.

If a candidate used language that didn't "read" male -- men are more likely to use verbs like "executed" and "captured" in resumes, for instance -- they were disqualified.

When technicians peeled back the system's code, they found it was weighing a candidate's gender characteristics more heavily than their technical knowledge or coding.

That meant a novice candidate could get away with knowing less than nothing about technology. Amazon tried to correct the bugs in the system, but nothing it tried worked.

In one of its final iterations, the team had written in so many safeguards against sexism that HRBot lost its mind and started recommending any applicant that was put in front of it.
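For the curious, here is a toy sketch of how a skewed resume pile can leak into a model's word weights. It is not Amazon's system: the `HISTORY` data, the `learn_weights` and `score` helpers, and the smoothed log-odds scoring are all made up for illustration.

```python
import math
from collections import Counter

# Hypothetical toy history: (resume text, was the candidate hired?).
# The skew is deliberate: every resume mentioning "women's" was rejected.
HISTORY = [
    ("executed migration captured requirements", True),
    ("executed deployment led team", True),
    ("captured metrics built pipeline", True),
    ("women's chess club captain built pipeline", False),
    ("women's college graduate led team", False),
]

def learn_weights(history):
    """Give each word a log-odds weight based on past hire/reject outcomes."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        words = set(text.split())
        (hired if was_hired else rejected).update(words)
    vocab = set(hired) | set(rejected)
    # Add-one smoothing so unseen counts do not divide by zero.
    return {w: math.log((hired[w] + 1) / (rejected[w] + 1)) for w in vocab}

def score(resume, weights):
    """Sum the learned weights of the words in a resume; unknown words count as 0."""
    return sum(weights.get(w, 0.0) for w in set(resume.split()))

weights = learn_weights(HISTORY)
print(round(weights["women's"], 2), round(weights["executed"], 2))
```

Nobody told this toy model anything about gender. It simply noticed which words showed up next to past rejections, which is why patching individual words tends to turn into whack-a-mole.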

With no guarantee of success, the project was scrapped and placed in the same forgotten storage closet as the Fire Phone and Jeff Bezos' sexts.

Google Translate Turned Into A Creepy Nostradamus

We can't think of a single occasion in the last few years when we've used Google Translate.

If you're like us, it might be wise to get back into the habit, though, because there's a wealth of evidence to suggest that this isolation is making the site, well, a little insane.

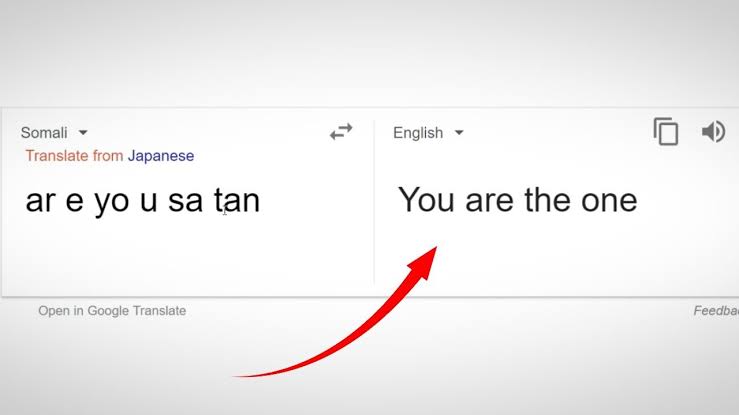

In June 2018, bored monolinguals / high-schoolers cheating on their language homework stumbled upon what at first glance reads like the opening pages of a bad conspiracy novel.

When an assortment of commonplace words like "dog" was typed repeatedly into Google Translate and converted into English, the site started spitting out vaguely sinister religious teachings.

Entering the word "dog" 19 times and converting the text from Maori to English, for instance, resulted in Translate providing the "helpful" suggestion that the poster should stop screwing around and start preparing their soul for the coming apocalypse.

Other translations were slightly less cryptic, as one user discovered when they translated "goo" (typed 13 times) from Somali to English, and were told that they should "cut off the penis into pieces, cut it into pieces."

People began freaking out about "TranslateGate" being the product of an honest-to-god ghost in the machine -- or even worse, evidence that Google was using the contents of people's email inboxes to train the electronic brain that makes Translate tick.

But when smarter people got their shot at looking things over, they suggested that the ominous messages were the result of Google's neural network struggling to make sense of the nonsensical inputs, which resulted in it giving equally nonsensical outputs.

Add in the fact that Translate was likely taught Maori using a copy of the Bible, and suddenly this mystery doesn't seem quite so spooky.

YouTube Thought The Notre-Dame Fire Was A Hoax

In April 2019, the iconic Notre-Dame Cathedral in Paris burnt to a crisp, the latest act in the Universe's long-running vendetta against the building.

The news of the fire shocked the world and caused countless people to immediately flock to YouTube, where outlets like CNN, Fox News, and France24 were streaming video of the event, live and in as much color as a sooty cloud allows.

While the world was watching, however, YouTube's sophisticated algorithms were skittering about the place and warning visitors that the stream everyone was tearfully clinging to contained copious amounts of "misinformation."

It's not quite clear what part of "This building is on fire" they considered to be fake news, but YouTube was so sure that it soon also started suggesting that people check out the Wikipedia article for September 11.

You know, to flex about what a real disaster looks like.

Now, I can sit and watch a live stream of Notre Dame burning while YouTube's fake news widget tells me about 9/11 for some reason.

Facebook Thought An Indonesian Earthquake Needed The Ol' Razzle Dazzle

We know that Facebook has been through some stupid-bad stuff during, oh, the entirety of its existence, but the law of averages states that at some point, the site has to do something good purely by accident.

This is not that story.

In the aftermath of the earthquake that struck the Indonesian island of Lombok in August 2018, killing 98 people, worried friends and relatives of the locals flocked to Facebook to inquire about people's safety and generally spread messages of hope.

A lot of these messages included the word "selamat," which depending on context can mean either "safe" or "congratulations."

“Congrats” in Indonesian is “selamat”. Selamat also means “to survive.”

After the 6.9 magnitude earthquake in Lombok, Facebook users wrote “I hope people will survive.” Then Facebook highlighted the word “selamat” and threw some balloons and confetti.

After everyone clicked "Share," they were greeted with their post and a carnival of digital delights that included balloons, confetti, and a whole lotta party vibes.

Suffice to say, no one was impressed.

When Facebook was called to account for the bad-taste attempt by its algorithm to lighten the mood, a spokesperson said that this was an error that would not be repeated.

The animation that triggered when someone wrote "congratulations" was turned off in that area forever, while the culprit algorithm was told to machine-learn how to read a room.
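How does a party animation end up on an earthquake post? A context-blind keyword match would do it. The sketch below is a guess at the general shape of such a feature, not Facebook's actual code: `CELEBRATION_KEYWORDS`, `should_celebrate`, and the `crisis_active` flag are all hypothetical names.

```python
# Hypothetical trigger list; the real feature's keywords are not public here.
CELEBRATION_KEYWORDS = {"selamat", "congratulations", "congrats"}

def should_celebrate(post: str) -> bool:
    """Naive check: fire the animation if any trigger word appears at all."""
    words = {w.strip(".,!?\"'").lower() for w in post.split()}
    return not words.isdisjoint(CELEBRATION_KEYWORDS)

def should_celebrate_guarded(post: str, crisis_active: bool) -> bool:
    """Same check, but suppressed while a crisis flag is set for the region.

    The crisis flag is an assumption for this sketch, loosely mirroring the
    "turned off in that area" fix described above.
    """
    return should_celebrate(post) and not crisis_active

# "Selamat ulang tahun!" is a birthday greeting; "Semoga semua selamat"
# roughly means "hope everyone is safe". The naive check cannot tell them apart.
print(should_celebrate("Selamat ulang tahun!"))
print(should_celebrate("Semoga semua selamat"))
print(should_celebrate_guarded("Semoga semua selamat", crisis_active=True))
```

A single word carries both meanings, so the only way to get this right is to look at something beyond the word itself, whether that is the surrounding sentence or what is happening in the region.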
